Who knew that everyday sketches of owls, gardens, yoga poses and the like could one day help Google teach its AI? Google is using examples of this common art to teach its AI to mimic the way humans draw. Sketch-RNN is the latest project Google is working on as it builds out its neural network systems.

This neural network has learned to draw by studying 5.5 million sketches made by people and collected through Google's game Quick, Draw! The game gives users a word and asks them to sketch it online. A neural network was applied to the game to learn how the human brain works by identifying what users were trying to sketch.

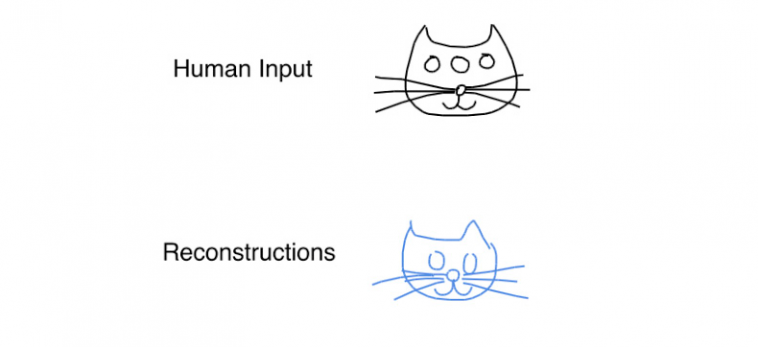

After learning from those sketches, Google's AI can now draw basic representations of cats, trucks and other objects when presented with hand-drawn sketches. This does not mean the network simply copies whatever it is fed. It first recognizes what the input represents and then tries to create an original doodle of its own, based on what it has learned about each object.

The following is an example of a sketch Sketch-RNN created when it was asked to draw a cat's face.

What makes Google's Sketch-RNN interesting is that it can draw even without a starting sketch. It can also finish sketches that humans have left incomplete.

Google appears to be working hard on upgrading all of its features to give users unique experiences. This time, it has succeeded in training a machine to draw and to generalize abstract concepts much as humans do, even when the sketches are far from photorealistic. This could open the door to more interesting applications.

The full paper is available here (PDF).

Via: The Next Web