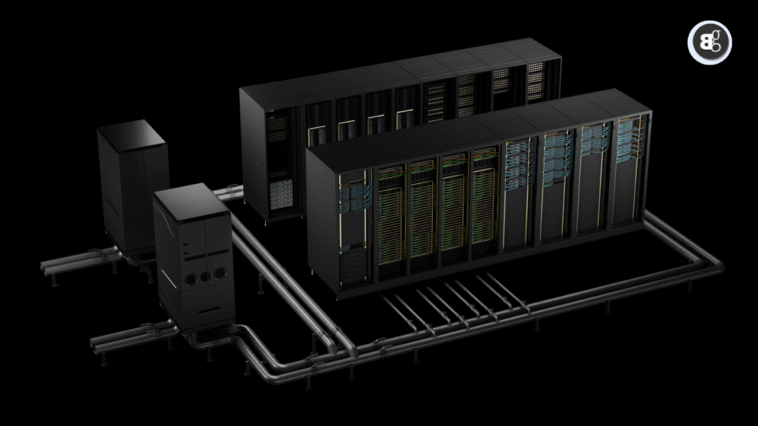

Artificial intelligence is growing so quickly that even the biggest data centres are starting to feel cramped. These massive facilities need endless racks of GPUs, enormous power supplies, and advanced cooling systems just to keep up with today’s AI models. But what happens when one building simply isn’t enough?

That’s exactly the problem NVIDIA says it wants to solve. Ahead of Hot Chips 2025, the company announced Spectrum-XGS Ethernet, a technology designed to connect AI data centres across long distances and make them act like one giant supercomputer. NVIDIA calls this idea “giga-scale AI super-factories.”

Smarter Scaling with NVIDIA

Traditionally, AI companies have had two ways to scale: scale up (make individual systems more powerful) or scale out (add more processors in the same location). But when physical space, power grids, and cooling capacity run out, both approaches hit a wall. NVIDIA’s “scale-across” approach is meant to bridge that gap by linking multiple sites together so they work as a single system, without the usual headaches of latency, bottlenecks, or unpredictable performance.

The technology comes with several notable features, including distance-aware algorithms, advanced congestion control, and real-time monitoring to keep data flowing smoothly between facilities. In simple terms, it’s like giving AI data centres a high-speed, carefully managed highway system instead of relying on bumpy local roads.

CoreWeave Steps In to Test NVIDIA’s Scale-Across Tech

CoreWeave, a cloud infrastructure company specialising in GPU computing, has already signed up as one of the first to test this technology. If it works, CoreWeave could link its data centres into a seamless mega-cluster, giving customers access to far more AI computing power than any single site could offer.

Of course, there are still challenges. Physics can’t be ignored: the speed of light and the quality of long-distance network infrastructure will always set limits. Plus, managing distributed data centres involves more than just networking: issues like data synchronisation, fault tolerance, and cross-border regulations all come into play.
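To put the speed-of-light limit in numbers, here is a rough back-of-the-envelope sketch. It assumes light in optical fibre travels at about two-thirds of its vacuum speed (a refractive index of roughly 1.47, a typical figure for silica fibre, not one published by NVIDIA). It also counts only propagation delay, ignoring switching, queuing, and the fact that real fibre routes are rarely straight lines.

```python
# Rough estimate of the minimum round-trip delay over optical fibre.
# Assumption: refractive index ~1.47, so light in fibre travels at about
# 204,000 km/s. Only propagation delay is counted; switches, queues and
# indirect cable routes would all add more on top of this.
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum, km/s
FIBRE_REFRACTIVE_INDEX = 1.47      # typical value for silica fibre (assumption)


def fibre_rtt_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay, in milliseconds, over a straight fibre path."""
    one_way_s = distance_km * FIBRE_REFRACTIVE_INDEX / SPEED_OF_LIGHT_KM_S
    return 2 * one_way_s * 1000


for km in (1, 100, 1000):
    print(f"{km:>5} km -> {fibre_rtt_ms(km):.3f} ms round trip")
```

By this estimate, every 1,000 km of fibre adds close to 10 ms of round-trip delay before any networking equipment is involved. No networking technology can remove that delay; the best it can do is manage traffic around it.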

Still, the bigger picture is clear: as AI demands continue to explode, companies can’t rely forever on building ever-larger single facilities. NVIDIA’s Spectrum-XGS could mark a shift toward distributed AI super-factories, where computing power is spread across locations but feels like one unified machine.

For users, that could mean faster AI services, smoother experiences, and maybe even lower costs down the line. For the industry, it’s a glimpse of how the next generation of AI infrastructure might be built: not bigger, but smarter.

Summary

NVIDIA has introduced Spectrum-XGS Ethernet to link multiple AI data centres into one giant “giga-scale super-factory.” This new “scale across” approach goes beyond traditional scale-up or scale-out by connecting facilities seamlessly.

The technology uses distance-aware algorithms and congestion control to reduce latency and bottlenecks.

If successful, it could reshape AI infrastructure, enabling faster, smarter, and more distributed supercomputing.