If there’s one thing 2026 is teaching us, it’s that technology alone can’t define the future; values do. Artificial Intelligence is changing how we live, work, and even think, but the real revolution isn’t about machines getting smarter. It’s about us learning how to use them responsibly.

AI has gone from something exciting to something that affects every corner of society: jobs, art, law, politics, even relationships. The question is no longer “What can AI do?” but “What should AI do?”

Copyright Battle: Fairness in the Age of AI

Let’s start with one of the messiest issues: AI and creativity. Artists, writers, and filmmakers are asking a simple but powerful question: if AI uses their work to learn, shouldn’t they get credit, or at least a cut?

In 2025 alone, more than a dozen major lawsuits were filed by artists and authors against AI companies for using their content without permission. Companies like OpenAI and Stability AI faced backlash for “training” their models on copyrighted work. Some court rulings have favored AI companies; others have sided with creators.

The good news? Many experts believe 2026 might be the year we see a fair middle ground, one where innovation and creativity finally learn to coexist.

AI Agents and the Fear of Losing Control

Now comes another big question: how much control are we willing to give AI?

AI “agents” can already take actions, manage tasks, and make decisions with little to no human input. Sounds convenient, right? But when things go wrong, who takes the blame?

Regulators in the U.S. and Europe are now discussing new “AI responsibility laws” that would ensure every AI system has a human answerable for its decisions. Because if no one is accountable, trust in AI will crumble fast.

Jobs on the Line: The Human Cost of Progress

AI has already replaced people in some industries. According to a 2023 Goldman Sachs report, generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation. Clerical roles and customer service jobs have taken the hardest hit.

But this isn’t the end of the story. Across countries, businesses are focusing on reskilling rather than replacing. Many companies now offer AI-related training to help employees move into creative or technical roles. Governments, too, are stepping in with tax breaks for companies that invest in upskilling programs.

Ethically speaking, if AI saves money by replacing workers, that money should go back into helping people rebuild their careers. It’s not just fair; it’s necessary.

Global Standards: A Divided Map

AI doesn’t respect borders, but laws do. That’s why regulation remains fragmented.

- The EU has passed the AI Act, the world’s first comprehensive AI law, setting strict guidelines on transparency and accountability.

- China is enforcing laws focused on data control and national security.

- The U.S., however, still lacks a unified federal AI law, leaving states to make their own rules.

Experts warn that without global coordination, we risk creating “AI safe havens” where companies operate without oversight. In 2026, one of the biggest challenges will be building a shared ethical framework across nations.

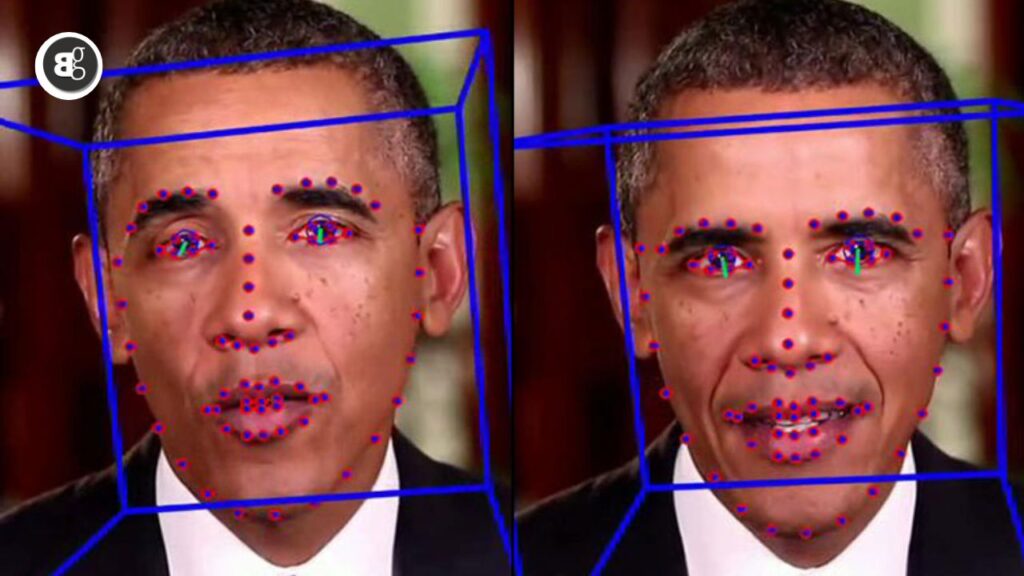

Fighting Deepfakes and Misinformation

Remember when the internet was about connecting people? Now, it’s about figuring out who’s even real.

AI-generated deepfakes are flooding social media: fake videos, cloned voices, and fabricated political messages. They look real, sound real, and can easily manipulate public opinion.

Governments in South Korea, the EU, and the U.S. are pushing new rules that require labels on AI-generated content. Meanwhile, tech platforms are testing watermarking systems to verify what’s real. But no law can replace human awareness. In 2026, the smartest users will be those who question what they see.

Transparency and the “Black Box” Problem

There’s one more big problem: we don’t always understand how AI makes decisions.

From approving bank loans to filtering job applications, algorithms decide the fate of millions daily. Yet their reasoning is hidden inside a “black box.”

Experts call for Explainable AI: systems that show why they make certain choices. Think of it like a car dashboard for algorithms: transparent, measurable, and human-readable. Without that, AI risks losing the one thing it needs most: trust.

The Human Future of Artificial Intelligence

The truth is, AI doesn’t have morals. We do.

What makes AI ethical isn’t the code; it’s the conscience of the people building and using it. Companies that treat ethics as a checklist will fall behind. Those that make fairness and transparency part of their DNA will lead the future.

In 2026, the real winners won’t just be tech giants or startups; they’ll be the humans who remember that progress without purpose isn’t progress at all.