Elon Musk’s xAI has taken a dramatic leap in the AI arms race with the launch of Colossus 2, the world’s first gigawatt-scale AI training supercluster. Unveiled on Friday, the system represents a major acceleration in compute infrastructure, putting xAI well ahead of rivals such as OpenAI and Anthropic, which are not expected to reach a similar scale until 2027 or later.

The driving force behind Colossus 2 is simple but ambitious: to deliver the massive computational power required to train next-generation AI models, including Grok 4. As models grow larger and more complex, access to energy and hardware has become the true bottleneck, and xAI is moving aggressively to remove it.

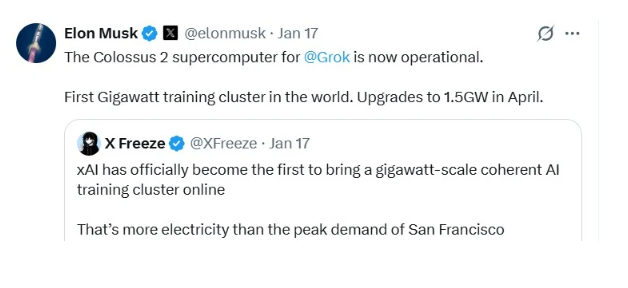

Built at record speed, Colossus 2 relies on on-site gas turbines paired with Tesla Megapacks, allowing xAI to bypass traditional grid constraints. At its current capacity, the cluster consumes as much electricity as San Francisco at peak demand. xAI has already announced plans to scale the system further, targeting 1.5 gigawatts by April, with longer-term ambitions pushing even higher.

The rapid deployment has drawn mixed reactions. NVIDIA CEO Jensen Huang publicly praised the achievement, calling it a landmark moment for large-scale AI training. At the same time, environmental activists and local groups have raised concerns about pollution and energy use, particularly in South Memphis neighborhoods near the facility.

While competitors are still drafting multi-year infrastructure roadmaps, xAI is already operating at major-city power levels. The speed of execution is striking:

- Colossus 1 went from initial build to full operation in just 122 days

- Colossus 2 has already crossed the 1-gigawatt threshold, with a path toward 2 gigawatts

Musk’s strategy itself isn’t entirely new, but the pace is. By prioritizing vertical integration, rapid construction, and direct energy sourcing, xAI is moving faster than any other major AI lab.

With Colossus 2, xAI has set a new benchmark for hardware density, power consumption, and the scale of its AI ambitions. The project signals a shift in how AI companies approach energy grids, infrastructure planning, and environmental trade-offs, marking a defining moment in an era in which computing power may matter more than the algorithms themselves.